#3 NVIDIA Jetson AGX Orin Review (Part 1/3)

A Real-World Analysis


At AHOMLAMA, we spend a lot of time evaluating technologies that can genuinely push the limits of what’s possible in AI and edge computing. Recently, we got our hands on the NVIDIA Jetson AGX Orin, and it’s safe to say this little machine has left a big impression.

When you first hold it, the Orin looks like a compact metal brick, small enough to fit in your palm. But under that modest exterior lies a computing powerhouse built to handle the most demanding AI workloads, all without needing a data center or an internet connection.

What Is the Jetson AGX Orin?

Launched in 2022, the NVIDIA Jetson AGX Orin is one of the most powerful embedded AI computing platforms in the world. Designed for developers, researchers, and businesses building real-time AI solutions, it combines the portability of a small form factor with the raw performance of a high-end GPU.

Here’s what stands out on paper:

Performance – Up to 275 trillion operations per second (TOPS, sparse INT8), roughly 8× the performance of the previous-generation Jetson AGX Xavier.

GPU – 2048 CUDA cores and 64 Tensor cores for high-throughput AI computation.

Power Efficiency – All that performance within a maximum power envelope of 60W, with lower-power modes available, making it deployable in places where heat and power are limited.

The combination of CUDA cores (optimized for parallel processing) and Tensor cores (built for deep learning acceleration) means it can handle everything from lightweight neural networks to large, complex AI models, all on the edge.
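To put the headline numbers in perspective, here is some back-of-the-envelope arithmetic in Python. This is a minimal sketch under stated assumptions, not a benchmark. It assumes that 275 TOPS is NVIDIA's peak sparse-INT8 figure and that 60W is the top power mode. It also uses the commonly quoted 32 TOPS for the Jetson AGX Xavier as the previous-generation reference. Real workloads will land well below these peaks.

```python
# Back-of-the-envelope spec arithmetic for the Jetson AGX Orin.
# Assumptions: 275 TOPS is the peak (sparse INT8) figure, 60 W is the top
# power mode, and 32 TOPS is the commonly quoted Jetson AGX Xavier peak.
ORIN_PEAK_TOPS = 275.0
ORIN_MAX_POWER_W = 60.0
XAVIER_PEAK_TOPS = 32.0  # assumption: previous-generation reference point


def tops_per_watt(tops: float, watts: float) -> float:
    """Peak efficiency in TOPS per watt (an upper bound, not a measured value)."""
    return tops / watts


def speedup(new_tops: float, old_tops: float) -> float:
    """Ratio of peak throughputs between two platforms."""
    return new_tops / old_tops


if __name__ == "__main__":
    print(f"Peak efficiency: {tops_per_watt(ORIN_PEAK_TOPS, ORIN_MAX_POWER_W):.2f} TOPS/W")
    print(f"Peak ratio vs. Xavier: {speedup(ORIN_PEAK_TOPS, XAVIER_PEAK_TOPS):.1f}x")
```

Run as a script, this prints about 4.58 TOPS/W and a peak ratio of about 8.6x. That ratio is consistent with the "roughly 8×" generational claim.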

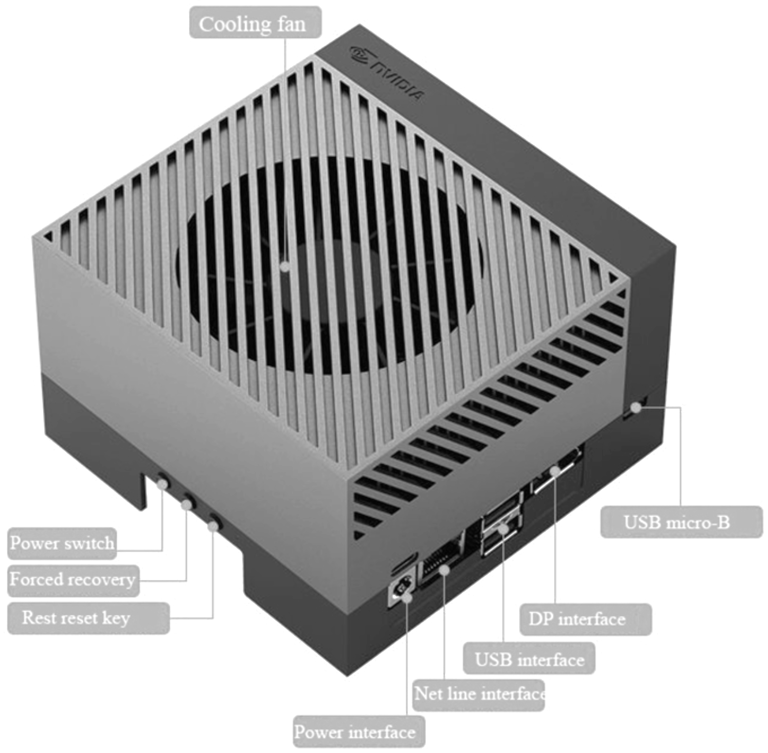

Connectivity: Designed for Real-World Projects

One of the first things we noticed during setup was how developer-friendly the Orin is. The board comes with a wide variety of ports and interfaces that make it plug-and-play for almost any type of AI application.

USB Ports – Connected a keyboard, mouse, and external SSD without a hiccup. File transfers were quick, with no noticeable bottlenecks.

Ethernet Port – Provided a rock-solid wired connection for downloading updates, large datasets, and pushing code to the device.

HDMI Output – Easily hooked it up to a 4K monitor for real-time visualization of model outputs.

Camera Interfaces – Tested with a high-resolution camera; it integrated seamlessly, making it perfect for computer vision use cases.

Simple Power Connector – Plug it in, power up, and you’re operational; no complicated wiring or assembly needed.

Putting It to the Test: Performance in the Real World

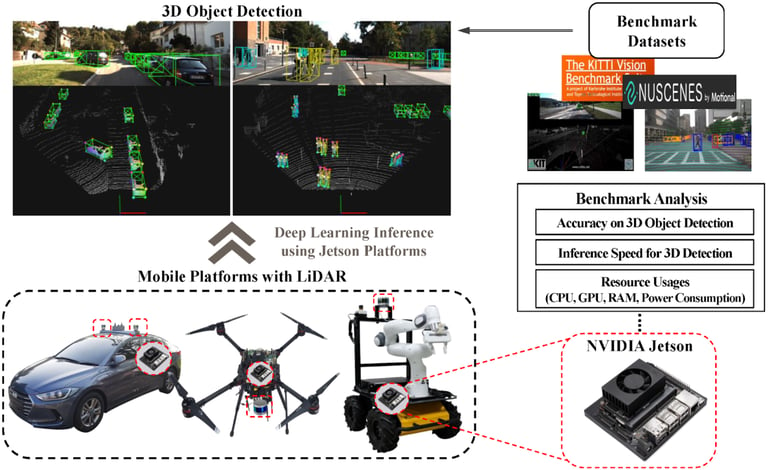

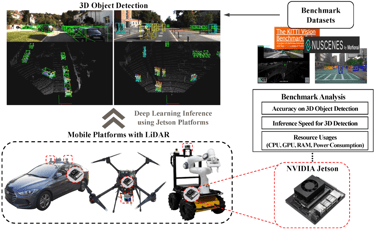

We ran the Orin through multiple test scenarios to see how it would handle real workloads, and the results were consistently impressive. Our testing was also informed by the published benchmark analysis in [1].

Live Video Analysis

We connected a camera and ran object detection models in real time. The Orin was able to identify people, animals, and moving vehicles with a frame-to-frame latency under 25 milliseconds. That’s the kind of responsiveness you need for mission-critical applications like surveillance and reconnaissance drones, industrial inspection, or autonomous vehicles.
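A latency budget of 25 ms per frame corresponds to at least 40 frames per second (1000 / 25). One simple way to check numbers like this is to time each inference call and report both the mean and the worst case. For mission-critical use, a single slow frame can matter as much as the average. The sketch below assumes a hypothetical `detect(frame)` function standing in for your actual model call. It measures inference time per call only, so camera capture and display overhead are not included.

```python
import statistics
import time
from typing import Callable, Iterable, List


def measure_latencies_ms(frames: Iterable[object],
                         detect: Callable[[object], object]) -> List[float]:
    """Time each call to `detect` and return per-frame latencies in milliseconds."""
    latencies = []
    for frame in frames:
        start = time.perf_counter()
        detect(frame)  # hypothetical detector; swap in your real model call
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def summarize(latencies_ms: List[float]) -> dict:
    """Report mean and worst-case latency plus the frame rate the mean implies."""
    mean_ms = statistics.mean(latencies_ms)
    return {
        "mean_ms": mean_ms,
        "max_ms": max(latencies_ms),
        "implied_fps": 1000.0 / mean_ms,
    }


if __name__ == "__main__":
    def fake_detect(frame: object) -> None:
        # Stand-in detector that just sleeps ~5 ms; replace with real inference.
        time.sleep(0.005)

    stats = summarize(measure_latencies_ms(range(20), fake_detect))
    print({k: round(v, 2) for k, v in stats.items()})
```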

Image Recognition at Scale

Using its Tensor cores, the Orin processed over 200 images per second with strong accuracy. This is critical for use cases like large-scale satellite imagery analysis or automated quality control in manufacturing.
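Throughput and latency are related but not the same thing. 200 images per second works out to an average of 5 ms of compute per image. However, when images are processed in batches, each image still waits for its whole batch to finish. The sketch below shows one way to measure batched throughput. It assumes a hypothetical `classify_batch(batch)` function and an arbitrary default batch size of 8; neither is a specific NVIDIA API.

```python
import time
from typing import Callable, Sequence


def images_per_second(images: Sequence[object],
                      classify_batch: Callable[[Sequence[object]], object],
                      batch_size: int = 8) -> float:
    """Classify all images in fixed-size batches and return images per second."""
    if not images or batch_size <= 0:
        raise ValueError("need at least one image and a positive batch_size")
    start = time.perf_counter()
    for i in range(0, len(images), batch_size):
        classify_batch(images[i:i + batch_size])  # hypothetical model call
    elapsed = time.perf_counter() - start
    return len(images) / elapsed


def ms_per_image(throughput_ips: float) -> float:
    """Convert throughput (images per second) into average milliseconds per image."""
    return 1000.0 / throughput_ips
```

For example, `ms_per_image(200.0)` returns `5.0`. Larger batches usually raise throughput at the cost of per-image latency, so the batch size is worth tuning per use case.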

Fully Offline AI

Perhaps the most game-changing feature for us is that the Orin does all of this without needing the cloud. We ran multiple AI models entirely offline, from image recognition to voice processing, and they performed flawlessly. This has enormous implications for projects that require low latency, high privacy, or operation in bandwidth-limited environments.

Multi-Tasking Without Breaking a Sweat

In one test, we ran live video analysis and voice recognition simultaneously. The Orin handled both smoothly while using less than 50% of its available processing power. This means developers can stack multiple AI workloads together and still have headroom for more.
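Running two pipelines side by side can be structured in several ways. A simple Python option is to launch each workload in its own thread and collect the results. The sketch below assumes that each pipeline spends most of its time in native GPU or I/O calls that typically release Python's GIL. If a pipeline is CPU-bound in pure Python, separate processes would be the safer choice. The `video_analysis` and `voice_recognition` functions are hypothetical stand-ins that just sleep; they are not the models we ran.

```python
from concurrent.futures import ThreadPoolExecutor
import time
from typing import Callable, Dict


def run_concurrently(workloads: Dict[str, Callable[[], object]]) -> Dict[str, object]:
    """Start every workload at once and collect each one's result by name."""
    with ThreadPoolExecutor(max_workers=max(1, len(workloads))) as pool:
        futures = {name: pool.submit(fn) for name, fn in workloads.items()}
        return {name: fut.result() for name, fut in futures.items()}


if __name__ == "__main__":
    # Hypothetical stand-ins for the two pipelines; each just sleeps briefly.
    def video_analysis() -> str:
        time.sleep(0.05)
        return "video: ok"

    def voice_recognition() -> str:
        time.sleep(0.05)
        return "voice: ok"

    start = time.perf_counter()
    results = run_concurrently({"video": video_analysis, "voice": voice_recognition})
    print(results, f"{time.perf_counter() - start:.2f}s")
```

Because the two stand-ins run in parallel, the total wall time stays close to one workload's duration rather than the sum of both.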

[1]

Why the Jetson AGX Orin Stands Out

The Orin sets itself apart in three ways:

Raw Power in a Small Package – You’re getting workstation-grade performance in something the size of your palm.

Developer Experience – Thanks to NVIDIA’s JetPack SDK, you can go from unboxing to running a functional AI demo in a matter of hours. The SDK comes loaded with drivers, pre-trained models, and optimization tools, making it easy to hit the ground running.

True Edge AI – Processing everything locally means faster results, no dependency on external servers, and tighter control over data privacy. When you can get inference results in under 10 ms, there’s little reason to wait on a round trip to the cloud.
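The edge-versus-cloud argument comes down to simple addition. A cloud call pays for uploading the input, the network round trip, and the remote inference. A local call pays only for local inference. The sketch below makes that comparison explicit. All inputs are hypothetical parameters rather than measurements. Even if cloud inference were instant and uploads were free, a 10 ms local result wins whenever the network round trip alone exceeds 10 ms.

```python
def cloud_latency_ms(upload_ms: float, network_rtt_ms: float,
                     cloud_inference_ms: float) -> float:
    """End-to-end latency of a cloud call: send the input, round trip, remote inference."""
    return upload_ms + network_rtt_ms + cloud_inference_ms


def edge_is_faster(edge_inference_ms: float, upload_ms: float,
                   network_rtt_ms: float, cloud_inference_ms: float) -> bool:
    """True when running locally beats the full cloud path."""
    return edge_inference_ms < cloud_latency_ms(upload_ms, network_rtt_ms, cloud_inference_ms)


if __name__ == "__main__":
    # Hypothetical inputs, not measurements: free upload and instant cloud
    # inference, but a 30 ms network round trip.
    print(edge_is_faster(10.0, upload_ms=0.0, network_rtt_ms=30.0, cloud_inference_ms=0.0))
```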

Who Should Use It?

AI Developers building smart cameras, inspection systems, autonomous drones, or advanced robotics.

Students and Researchers who want a hands-on platform for exploring deep learning and edge AI without relying on cloud GPUs.

Businesses adding AI to products for retail analytics, predictive maintenance, automation, or security systems.

Final Verdict

We at AHOMLAMA are already integrating the AGX Orin into the projects we build. The NVIDIA Jetson AGX Orin is more than just powerful hardware; it’s a platform that allows developers to go from concept to deployment without compromise. Orin delivers the perfect balance of performance, efficiency, and flexibility. For us, it’s not just another dev board but a core part of our toolkit for building fast, private, and deployable AI solutions.

#AI #NVIDIA #AHOMLAMA #JetsonAGXOrin #EdgeComputing #MachineLearning #Robotics #Innovation #TechJourney #GenerativeAI #EdgeAI #BuildCoolTech

References

[1] Choe, Chungjae, Choe, Minjae, & Jung, Sungwook. (2023). Run Your 3D Object Detector on NVIDIA Jetson Platforms: A Benchmark Analysis. Sensors, 23(8), 4005. doi:10.3390/s23084005