#4 NVIDIA Jetson AGX Orin: Hardware Deep Dive (Part 2/3)

A Real-World Analysis, Continued

As edge AI accelerates elements of everyday life (autonomous vehicles, smart cameras, robotics, and the industrial IoT), the need for compact yet powerful compute platforms has reached new heights. NVIDIA’s Jetson series has consistently responded to this demand. From the original Tegra-powered Jetson TK1 to the breakthrough Xavier platform, each generation has meaningfully advanced the state of embedded AI. As reviewed in the first part of this series, the Jetson AGX Orin series marks a structural shift, delivering data-center-class AI in the familiar, developer-friendly system-on-module (SOM) form factor.

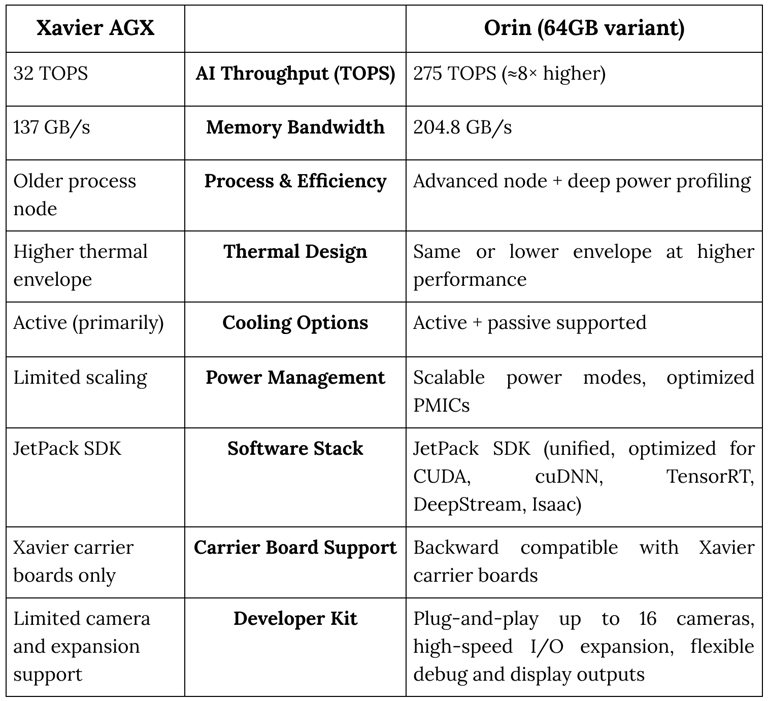

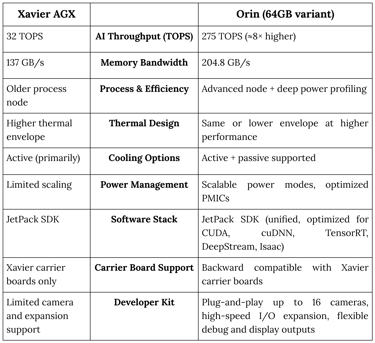

A key concern for developers and integrators is how Orin improves on, and disrupts, the Jetson Xavier era:

Orin vs. Its Predecessors: Performance and Usability Comparison

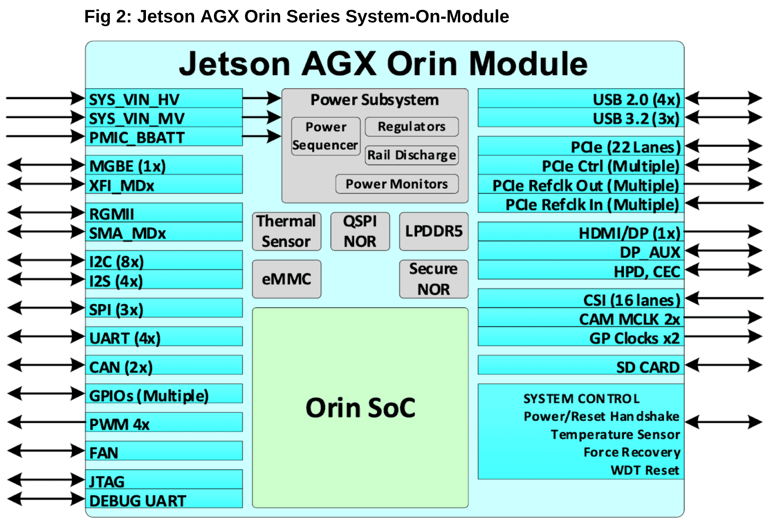

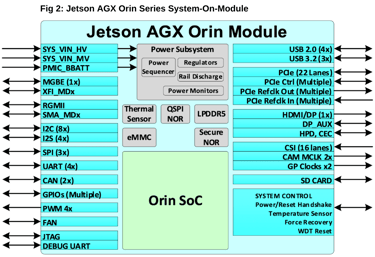

1. Compact System-on-Module Approach

Orin’s SOM integrates CPU, GPU, memory subsystems, and I/O within a 100 × 87 mm package. This architectural consolidation means fewer PCB traces, shorter data pipelines, and less complexity for developers. The Orin series remains pin-compatible with Jetson AGX Xavier, supporting drop-in upgrades or phased transitions in production environments.

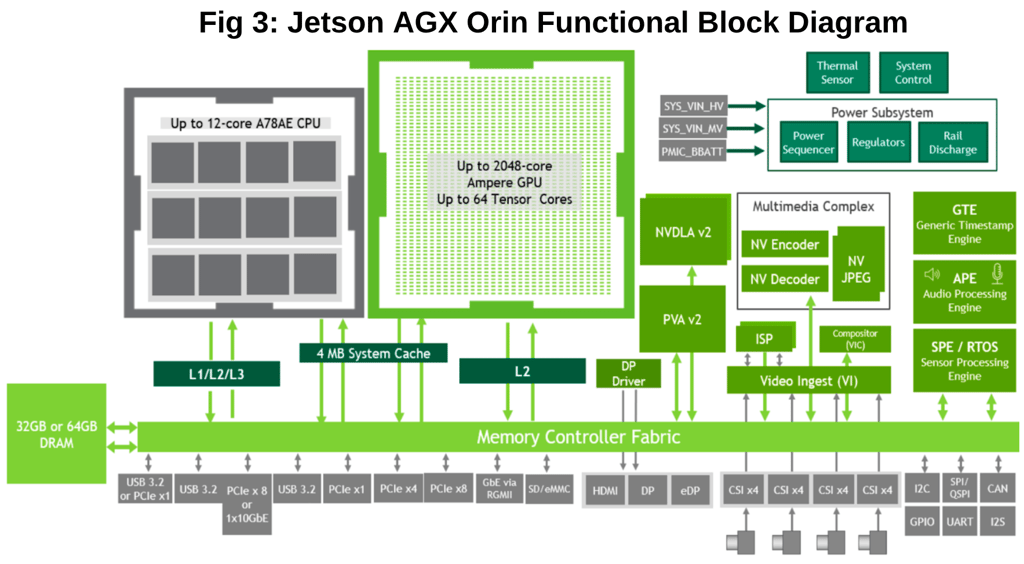

2. Compute Architecture Upgrades

Orin’s compute foundation is built on several complementary engines:

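Ampere GPU: With up to 2048 CUDA cores and 64 Tensor Cores (in the 64GB variant), the GPU runs AI workloads substantially faster and more efficiently than Xavier’s Volta GPU, particularly models that use mixed precision (FP16/INT8/INT4). NVIDIA rates the 64GB module at up to 275 INT8 TOPS (with sparsity), versus 32 TOPS for Jetson AGX Xavier.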

Arm Cortex-A78AE CPUs: Up to twelve CPU cores running at up to 2.2 GHz, with out-of-order execution, advanced branch prediction, and reliability and safety features (ECC, RAS), let Orin handle multi-threaded data processing and real-time system tasks concurrently.

Next-Generation NVDLA: Two deep learning accelerators (NVDLA v2.0) offload high-throughput neural-network inference from the GPU, delivering better power efficiency than Xavier’s implementation.
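To put the GPU figures in perspective, theoretical peak FP32 throughput can be estimated as CUDA cores × 2 FLOPs per cycle (one fused multiply-add) × GPU clock. The sketch below assumes a 1.3 GHz maximum GPU clock for the 64GB module, a figure taken from NVIDIA's published module specification rather than from this article. Sustained throughput in real workloads sits well below this ceiling, and Tensor Core (FP16/INT8) rates are counted separately.

```python
def peak_fp32_tflops(cuda_cores: int, clock_ghz: float, flops_per_core_per_cycle: int = 2) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes every CUDA core retires one fused multiply-add (counted as 2 FLOPs)
    on every clock cycle, which real kernels never quite achieve.
    """
    # cores x FLOPs per cycle x (clock in GHz) gives GFLOPS; divide by 1000 for TFLOPS.
    gflops = cuda_cores * flops_per_core_per_cycle * clock_ghz
    return gflops / 1_000.0


# Assumption: 2048 CUDA cores (from the text) at a 1.3 GHz max GPU clock (not from the text).
orin_tflops = peak_fp32_tflops(cuda_cores=2048, clock_ghz=1.3)
print(f"Jetson AGX Orin 64GB peak FP32: {orin_tflops:.2f} TFLOPS")
```

Lower power envelopes cap the GPU clock (and may reduce the number of active GPU cores), so feeding in a given mode's figures gives that mode's theoretical ceiling.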

3. Memory and Storage

Orin pushes bandwidth boundaries with up to 64GB of LPDDR5 and 204.8 GB/s of memory bandwidth, about 1.5× the 136.5 GB/s of Jetson AGX Xavier, enough to feed multiple high-resolution sensors and real-time AI concurrently. With 64GB of eMMC 5.1 storage, Orin doubles Xavier’s capacity, supporting extensive logging and offline datasets.
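The 204.8 GB/s figure follows directly from the memory interface: peak bandwidth equals transfers per second × bytes per transfer. The sketch assumes a 256-bit LPDDR5 interface at 6400 MT/s for Orin and a 256-bit LPDDR4x interface at 4266 MT/s for AGX Xavier; both configurations come from NVIDIA's module datasheets, not from this article.

```python
def peak_dram_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int) -> float:
    """Peak DRAM bandwidth in GB/s (decimal): transfers per second x bytes per transfer."""
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


orin = peak_dram_bandwidth_gbs(6400, 256)    # assumed: LPDDR5 at 6400 MT/s, 256-bit bus
xavier = peak_dram_bandwidth_gbs(4266, 256)  # assumed: LPDDR4x at 4266 MT/s, 256-bit bus
print(f"Orin: {orin:.1f} GB/s, Xavier: {xavier:.1f} GB/s, ratio: {orin / xavier:.2f}x")
```

These are theoretical peaks. The CPU, GPU, DLAs, and camera pipeline all draw on this shared budget, so the bandwidth any single engine actually achieves is lower.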

4. I/O, Peripherals, and Camera Support

Orin is engineered for multi-modal edge AI. The SOM exposes a wide array of high-speed interfaces: PCIe Gen4 (twice Gen3’s per-lane data rate), USB 3.2 Gen2, multiple camera links (MIPI CSI-2 with up to 16 virtual channels), DisplayPort 1.4a, HDMI 2.1, and a full complement of GPIO, CAN, I2C, SPI, and other low-speed interfaces. Real-world applications can draw on an extensive sensor suite (LiDAR, cameras, radar) simultaneously without hitting an I/O bottleneck.
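The "twice the speed of Gen3" comparison is per lane: PCIe Gen3 signals at 8 GT/s and Gen4 at 16 GT/s, and both use 128b/130b line coding. A minimal sketch of the resulting per-direction bandwidth follows. It ignores packet and protocol overhead (so real throughput is somewhat lower), and the lane counts it loops over are illustrative, not a statement of Orin's lane configuration.

```python
ENCODING_EFFICIENCY = 128 / 130  # PCIe Gen3 and Gen4 both use 128b/130b line coding

# Raw per-lane signalling rate in gigatransfers per second (one bit per transfer).
TRANSFER_RATE_GTS = {3: 8.0, 4: 16.0}


def pcie_bandwidth_gbs(gen: int, lanes: int) -> float:
    """Per-direction link bandwidth in GB/s after line coding, before TLP/DLLP overhead."""
    return TRANSFER_RATE_GTS[gen] * ENCODING_EFFICIENCY * lanes / 8


for gen in (3, 4):
    for lanes in (1, 4, 8):
        print(f"Gen{gen} x{lanes}: {pcie_bandwidth_gbs(gen, lanes):.2f} GB/s per direction")
```

Because the coding scheme is unchanged between generations, doubling the transfer rate doubles usable bandwidth at every lane width.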

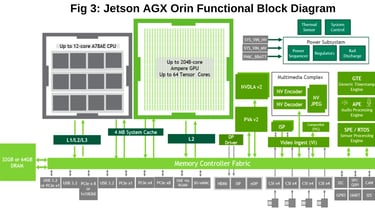

Detailed Orin Hardware Architecture and Data Flow

With edge AI, data often flows from multiple sources (cameras, microphones, network feeds), requiring versatile yet predictable data handling.

Orin’s Data Orchestration

At the heart of Orin’s module is a unified memory controller that arbitrates access among the CPU, GPU, DLAs, visual processing accelerators (VIC/PVA), and other subsystems. The architecture is designed so that concurrent workloads, such as perception, control, and the user interface, each receive low-latency access to DRAM and I/O without one workload starving the others.
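The starvation-freedom idea can be illustrated with the simplest fair arbitration policy, round-robin: each cycle, the grant goes to the next client in rotation that has a pending request, so a bursty client cannot lock out the others. This is a generic, hypothetical sketch; the article does not describe Orin's actual memory-controller policy, and production arbiters typically layer priorities and QoS guarantees on top of this basic idea. The function and client names are illustrative.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


def round_robin_arbitrate(queues: Dict[str, Deque[str]], max_grants: int) -> List[Tuple[str, str]]:
    """Grant one request per cycle, rotating across clients so none is starved.

    `queues` maps a client name (e.g. "GPU") to its pending requests; the
    deques are consumed in place. Returns grants in (client, request) order.
    """
    clients = list(queues)
    grants: List[Tuple[str, str]] = []
    next_idx = 0  # rotation pointer: where the next search for a requester starts
    while len(grants) < max_grants and any(queues.values()):
        for offset in range(len(clients)):
            idx = (next_idx + offset) % len(clients)
            client = clients[idx]
            if queues[client]:
                grants.append((client, queues[client].popleft()))
                next_idx = idx + 1  # the winner goes to the back of the rotation
                break
    return grants


# Hypothetical clients: a bursty GPU competing with a light CPU and DLA load.
queues = {
    "GPU": deque(["g0", "g1", "g2", "g3"]),
    "CPU": deque(["c0"]),
    "DLA": deque(["d0", "d1"]),
}
for client, request in round_robin_arbitrate(queues, max_grants=7):
    print(client, request)
```

With three clients, a client that has a pending request is served after at most two grants to other clients, no matter how many requests the GPU has queued.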

Central Data Switch: All compute and vision accelerators communicate over a high-throughput crossbar, allowing them to operate in parallel. Data from multiple vision streams, LiDAR packets, or time-of-flight sensors enters through the camera interfaces or PCIe and is routed to the most suitable engine: the Ampere GPU for deep learning, the NVDLA for inference, the PVA/VIC for preprocessing, or the hardware video encoders for compression.

I/O Expansion: Multiple layers of I/O (parallel PCIe buses, USB controllers, and network interfaces) allow high-bandwidth expansion and real-time, deterministic response for safety-critical systems (e.g., robotics, drones, autonomous transport).
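From a software-planning perspective, routing data "to the optimal engine" amounts to deciding which engine runs each stage of each sensor pipeline. The sketch below is hypothetical: the stage names and engine assignments are illustrative assumptions, not NVIDIA defaults, and in practice the application sets the mapping (for example, by choosing GPU or DLA as a model's inference target). It counts how many stage instances land on each engine for a given sensor mix, a quick way to spot an overloaded accelerator before profiling.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Engine(Enum):
    GPU = "Ampere GPU"
    DLA = "NVDLA"
    PVA = "Programmable Vision Accelerator"
    VIC = "Video Image Compositor"


@dataclass(frozen=True)
class Stage:
    name: str
    engine: Engine


# Hypothetical per-sensor pipelines; the engine chosen for each stage is an assumption.
PIPELINES: Dict[str, List[Stage]] = {
    "camera": [Stage("resize + colour convert", Engine.VIC),
               Stage("object detector", Engine.DLA),
               Stage("tracker", Engine.GPU)],
    "lidar": [Stage("voxelize", Engine.GPU),
              Stage("3D detector", Engine.GPU)],
    "tof": [Stage("depth filter", Engine.PVA),
            Stage("segmentation", Engine.DLA)],
}


def engine_load(stream_counts: Dict[str, int]) -> Dict[Engine, int]:
    """Count stage instances per engine, given how many streams of each sensor type exist."""
    load = {engine: 0 for engine in Engine}
    for sensor, count in stream_counts.items():
        for stage in PIPELINES[sensor]:
            load[stage.engine] += count
    return load


for engine, n in engine_load({"camera": 4, "lidar": 1, "tof": 2}).items():
    print(f"{engine.value}: {n} stage instances")
```

Counting stage instances ignores per-stage cost, so this is only a first pass; a real plan would weight each stage by its measured latency on the chosen engine.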

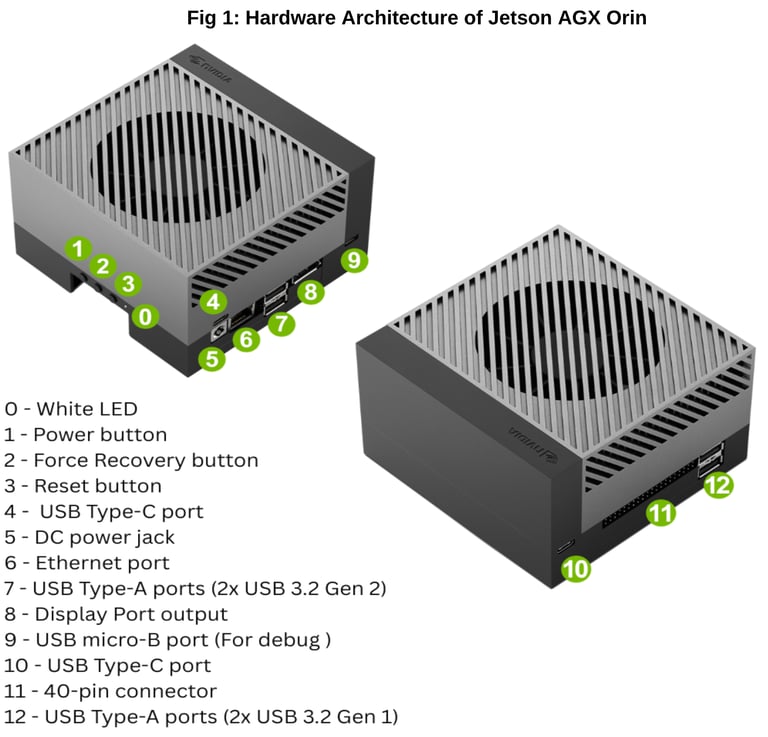

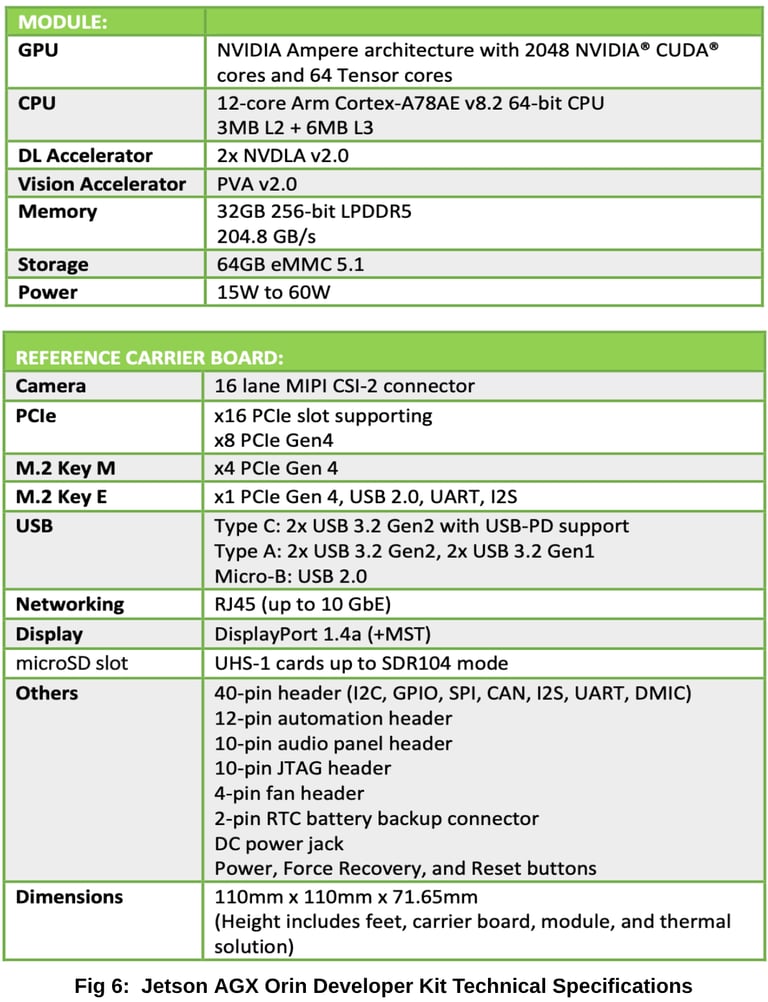

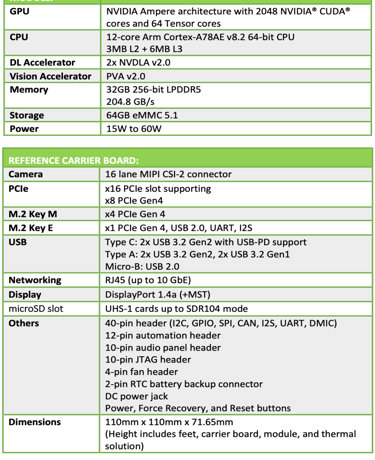

Developer Kit: Specifications and Expanded Features

To accelerate development, evaluation, and field trials, NVIDIA pairs the Orin SOM with a feature-rich developer kit carrier board.

Technical Specifications

GPU: NVIDIA Ampere, 2048 CUDA cores, 64 Tensor Cores (64GB variant)

CPU: 12-core Arm Cortex-A78AE at up to 2.2 GHz, with a multi-level cache hierarchy for high-throughput workloads

Deep Learning Accelerators: Dual NVDLA v2.0 for sustained INT8 inferencing

Vision Accelerators: Programmable Vision Accelerator (PVA v2.0) and Video Image Compositor (VIC)

Memory: 32GB/64GB LPDDR5, up to 204.8 GB/s

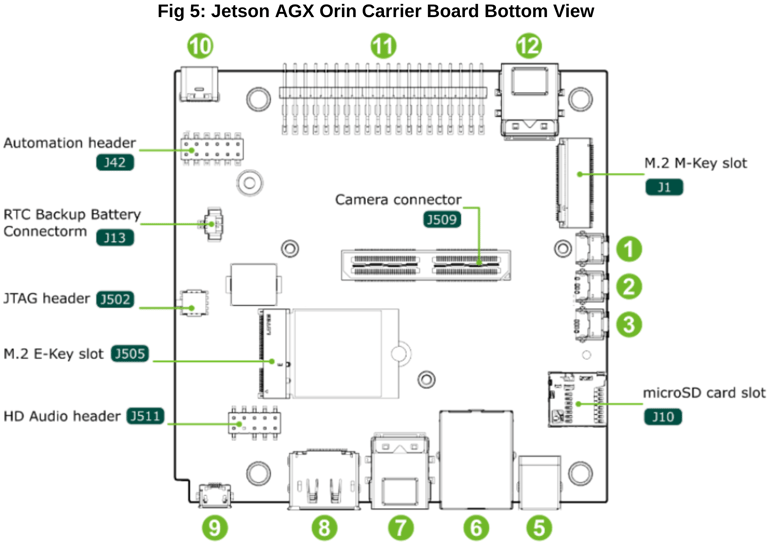

Storage: 64GB eMMC, plus M.2 expansion for NVMe SSDs

Power: User-selectable 15–60W power envelopes, supporting mobile and fixed installations

Camera: Support for multi-lane, multi-module MIPI CSI-2, up to 6 physical cameras (16 via virtual channels)

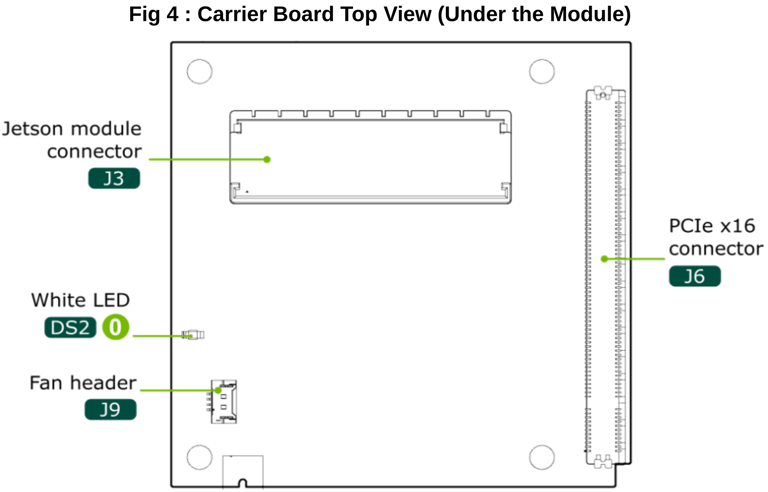

Expansion: PCIe x16 slot (x8 PCIe Gen4 electrical), M.2 Key M (NVMe x4), M.2 Key E for wireless modules

Networking: RJ45 Ethernet up to 10 GbE

USB: Multiple USB 3.2 Gen2 (Type-A and Type-C with power delivery), USB Micro-B for device/debug

Display: DisplayPort 1.4a, support for 8K/60Hz, HDMI 2.1
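The 8K/60Hz display figure is worth a quick bandwidth check. DisplayPort 1.4a tops out at HBR3 (8.1 Gbit/s per lane over four lanes, 8b/10b coded), while uncompressed 8K60 at 24 bits per pixel needs roughly 48 Gbit/s for the active pixels alone, so 8K60 over DP 1.4a relies on Display Stream Compression (DSC). The link rates come from the DisplayPort specification rather than this article, and the sketch ignores blanking intervals, which only increase the requirement.

```python
HBR3_GBPS_PER_LANE = 8.1        # DisplayPort HBR3 raw signalling rate per lane
LANES = 4                       # a full-width DisplayPort main link
LINE_CODE_EFFICIENCY = 8 / 10   # 8b/10b coding: 8 payload bits per 10 line bits


def dp_payload_gbps() -> float:
    """Maximum payload rate of a 4-lane HBR3 link in Gbit/s."""
    return HBR3_GBPS_PER_LANE * LANES * LINE_CODE_EFFICIENCY


def video_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int) -> float:
    """Active-pixel data rate in Gbit/s; blanking intervals are ignored."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


link = dp_payload_gbps()
for label, (w, h) in {"4K": (3840, 2160), "8K": (7680, 4320)}.items():
    need = video_gbps(w, h, 60, 24)
    if need <= link:
        verdict = "fits uncompressed"
    else:
        verdict = f"needs at least {need / link:.2f}:1 compression (e.g. DSC)"
    print(f"{label}60 at 24 bpp: {need:.2f} of {link:.2f} Gbit/s -> {verdict}")
```

The same two functions can be reused to check other resolution, refresh-rate, and colour-depth combinations against the link before committing to a display.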

Connectivity and Expansion

Accessory headers include a 40-pin expansion header (I2C, GPIO, CAN, and more), along with dedicated headers for automation and JTAG debugging. Hardware buttons (power, reset, force recovery) and status LEDs simplify embedded development.

Real-World Applications and Future-Proofing with Orin

1. Robotics and Autonomous Machines

Orin’s combination of parallel AI and classical compute supports sensor fusion, path planning, SLAM, and vision at speeds previously confined to desktop-class GPUs. Industrial robots, drones, and autonomous mobile robots (AMRs) can now operate with greater autonomy, richer perception, and faster response.

2. Video Analytics at the Edge

With support for ingesting, pre-processing, encoding, and running AI inference on multiple high-frame-rate 4K streams, Orin transforms smart cameras, NVRs, and smart-city systems. Onboard accelerators handle object detection, facial recognition, and event-based analytics in real time.

3. Healthcare and Smart Infrastructure

Orin’s reliable, low-latency platform enables AI-powered ultrasound, smart patient monitors, and hospital logistics robots, with controls to minimize downtime and recover from faults that help integrators work toward compliance with medical device standards.

4. Research and Custom Embedded Solutions

Academics and enterprises use Orin for rapid prototyping, allowing proof-of-concept models to migrate quickly to production hardware. The software ecosystem and extensive documentation flatten the learning curve and accelerate innovation.

Energy-efficient operation, modular upgradability, and broad accessory compatibility help ensure that investments in Orin today remain relevant and performant as new sensors, models, and frameworks arrive in the years ahead.

Conclusion

The NVIDIA Jetson AGX Orin sets a new standard for edge AI, fusing server-class computing, high-speed vision, and modular design into an accessible platform for engineers, OEMs, and innovators. Whether you are upgrading from an earlier Jetson or starting afresh, Orin future-proofs your vision, supporting everything from rapid prototyping to scaled production in domains as diverse as robotics, healthcare, security, and smart cities. Orin is not merely another hardware iteration but a cornerstone of a new era in decentralized AI, with ample performance headroom for the intelligent systems of tomorrow.

#AI #NVIDIA #AHOMLAMA #JetsonAGXOrin #EdgeComputing #MachineLearning #Robotics #Innovation #TechJourney #GenerativeAI #EdgeAI #BuildCoolTech